My research focuses on Learning Representations: developing algorithms grounded in optimization theory to push the limits of AI. I work with generative models such as GANs and Diffusion Models, leverage Self-Supervised Learning, and explore how Adversarial Attacks on Large Language Models (LLMs) could reshape the future of AI.

I am currently a Senior Research Scientist at MARSAIL, a C2F High-Potential Postdoc at Chulalongkorn University, and an Adjunct Professor at Khon Kaen University. I received my Ph.D. in Computer Engineering from Chulalongkorn University, where I specialized in AI. I am also the founder of PBY Laboratory.

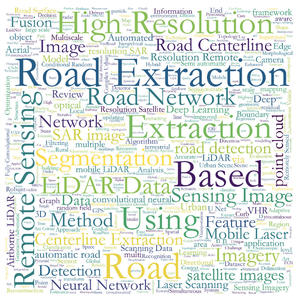

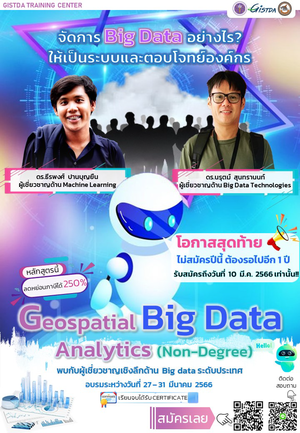

Passionate about Cognitive Intelligence and unlocking human potential, I am also deeply immersed in Geospatial Intelligence, where LLMs can surface new insights into how we understand and interact with our world.

Detailed summaries of my academic, industry, and teaching experience can be found in my CV or IEEE Biography, and you can get a glimpse into my personal life on my blog and Tumblr. Feel free to vibe to my music on SoundCloud and to check out my profile on UTMB World.

Thai name: ธีรพงศ์ ปานบุญยืน, aka Kao Panboonyuen, or just Kao (เก้า).

- Applied Earth Observations

- Geoscience and Remote Sensing

- Computer Vision

- Semantic Distillation

- Human-AI Interaction

- Learning Representations

- Postdoctoral Fellow in AI, 2026 - Chulalongkorn University

- PhD in Computer Engineering, 2020 - Chulalongkorn University

- MEng in Computer Engineering, 2017 - Chulalongkorn University

- BEng in Computer Engineering, 2015 - KMUTNB (Top 1% in University Mathematics)

- Pre-Engineering School (PET21), 2012 - KMUTNB (Senior High School, Grades 10-12)

Selected Awards

- H.M. the King Bhumibol Adulyadej’s 72nd Birthday Anniversary Scholarship (Master's) (Recipients)

- The 100th Anniversary Chulalongkorn University Fund for Doctoral Scholarship (Ph.D.) (Recipients)

- The 90th Anniversary of Chulalongkorn University Scholarship (Ph.D.)

- Postdoctoral Grant, Ratchadapisek Research Fund (RRF) (Chulalongkorn University) (Postdoc, 2021-2025) (Recipients)

- Postdoctoral Research Grant, Second Century Fund (C2F) (Chulalongkorn University) (Postdoc, 2025-2026) (Recipients) (C2F Certificate)

- Top 1% Score in University Differential Calculus and Engineering Mathematics

- 2016 EBA Disaster Management Fieldwork: international fieldwork on big data applications, hosted by Keio University, Japan (EBA)

- 2017 Best Student Paper Award at the International Conference on Computing and Information Technology (IC2IT)

- 2019 Best Young Researcher Paper Award at the First International Conference on Smart Technology & Urban Development (STUD)

- 2020 Ph.D. Defense Passed: proudly completed my doctoral journey, advancing the frontier of geospatial AI with FusionNetGeoLabel (Ph.D. Defense Slides)

- 2022 Bangkok Marathon 42.195K Finisher: successfully completed a full marathon (42.195 kilometers) (Bangkok Marathon)

- 2023 AI Research Featured in Techsauce News: grateful to be spotlighted for pushing the frontier of AI innovation (Techsauce).

- 2024 IRONMAN 70.3 Finisher: successfully completed a triathlon consisting of a 1.9K swim, 90K bike ride, and 21.1K run (IM70.3)

- 2024 Laguna Phuket Triathlon Finisher: successfully completed a triathlon consisting of a 1.8K swim, 55K bike ride, and 12K run (LPT)

- 2024 Distinguished Reviewer (Bronze Level), IEEE Transactions on Medical Imaging (Certificate)

- 2025 Chombueng Marathon 42.195K Finisher: successfully completed a full marathon (42.195 kilometers) (Chombueng Marathon)

- 2025 UTMB World Series INTHANON20 Finisher: successfully completed a 20K trail run in the UTMB World Series (UTMB Chiang Mai)

- 2025 25th Blood Donation: a milestone of compassion and generosity, giving back to society and helping fellow humans (Thai Red Cross Society).

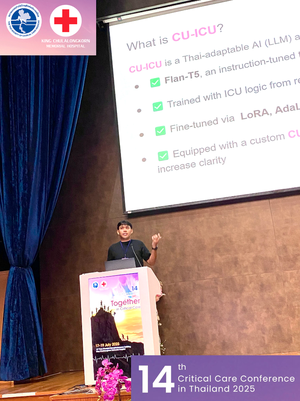

- 2025 Oral Presentation: selected among the top 12.5% of abstracts at the 14th Critical Care Conference, organized by the Thai Society of Critical Care Medicine (TSCCM)

- 2025 Global Young Scientists Summit (GYSS) Scholarship from Her Royal Highness Princess Maha Chakri Sirindhorn (GYSS)

Reviewer for International Journals/Conferences:

- Invited Reviewer of Pattern Recognition (Elsevier)

- Invited Reviewer of Neurocomputing (Elsevier)

- Invited Reviewer of Transactions on Knowledge Discovery from Data (ACM)

- Invited Reviewer of Computer Vision and Image Understanding (Elsevier)

- Invited Reviewer of Computers and Geosciences (Elsevier)

- Invited Reviewer of Neural Networks (Elsevier) (Certificate)

- Invited Reviewer of Remote Sensing (MDPI)

- Invited Reviewer of Artificial Intelligence Review (Springer)

- Invited Reviewer of Scientific Reports (Nature Portfolio) (Certificate)

- Invited Reviewer of GIScience & Remote Sensing (Taylor & Francis)

- Invited Reviewer of European Journal of Remote Sensing (Taylor & Francis)

- Invited Reviewer of International Journal of Remote Sensing (Taylor & Francis)

- Invited Reviewer of IEEE Transactions on Artificial Intelligence (IEEE)

- Invited Reviewer of IEEE Transactions on Big Data (IEEE)

- Invited Reviewer of IEEE Transactions on Image Processing (IEEE)

- Invited Reviewer of IEEE Transactions on Medical Imaging (IEEE) (Certificate)

- Invited Reviewer of IEEE Transactions on Geoscience and Remote Sensing (IEEE)

- Invited Reviewer of IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI) (IEEE)

- Recognized as an IOP Trusted Reviewer (IOP Publishing)

- More reviews are listed under my Web of Science ResearcherID AAO-4985-2020.

- More reviewer certificates are available in my GitHub repository.

Additional Certifications in Research Ethics:

- My certificate in GCP (Good Clinical Practice) – Ethics in Human Research is available in my GCP Certificate (English) and my GCP Certificate (Thai).

Editor for International Journals/Conferences:

- I serve as an Editorial Board Member / Editor for Discover Artificial Intelligence (Springer). My editorial training certificates are available here:

  - Welcome Course for Inclusive Journals: Certificate

  - Determining Suitability for Peer Review: Certificate

  - Identifying and Securing Potential Candidates: Certificate

  - Making Editorial Decisions and Evaluating Peer Reviewer Reports: Certificate

Selected Press

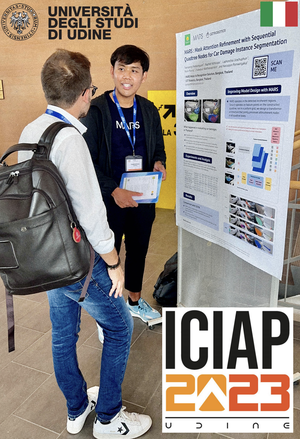

- The Leader Asia: Dr. Teerapong and his team introduced their advanced AI for car damage detection at ICIAP 2023 in Udine, setting new accuracy standards with their innovative MARS model.

- Techsauce: Highlighted the team's AI technology for automatic car damage assessment, which earned recognition for excellence at ICIAP 2023 in Italy.

- LINE TODAY: Showcased the MARS model at ICIAP 2023, noted for its high accuracy and setting new global standards in car damage detection.

- Moneychat: Reported the award-winning innovation in AI for car damage estimation presented at ICIAP 2023.

- Kaohoon: Celebrated the award-winning success of MARSAIL at ICIAP 2023.

- Mitistock: Introduced the MARS model, featuring advanced self-attention mechanisms for vehicle damage assessment in Thailand.

- The Story Thailand: Presented cutting-edge AI techniques in car damage detection, achieving high accuracy and setting international benchmarks.

- Media of Thailand: Unveiled the MARS model at ICIAP 2023, recognized globally for its precision in car damage detection.

- Thailand Insurance News: Featured Dr. Teerapong’s MARS model at ICIAP 2023 for its groundbreaking accuracy in car damage detection.

- WealthPlusToday: Dr. Teerapong’s MARSAIL wowed ICIAP 2023 in Italy, clinching an excellence award for next-gen car damage detection.

- Chulalongkorn University: Published a study on semantic road segmentation using deep convolutional neural networks.

- Chula Engineering News: Featured Dr. Teerapong’s participation in the Global Young Scientists Summit (GYSS) 2025, highlighting academic leadership and global collaboration.

- Thaivivat Insurance: Announced Dr. Teerapong’s research recognition at UAMC 2025, emphasizing advancements in AI for urban analytics and mobility challenges.

Featured Publications

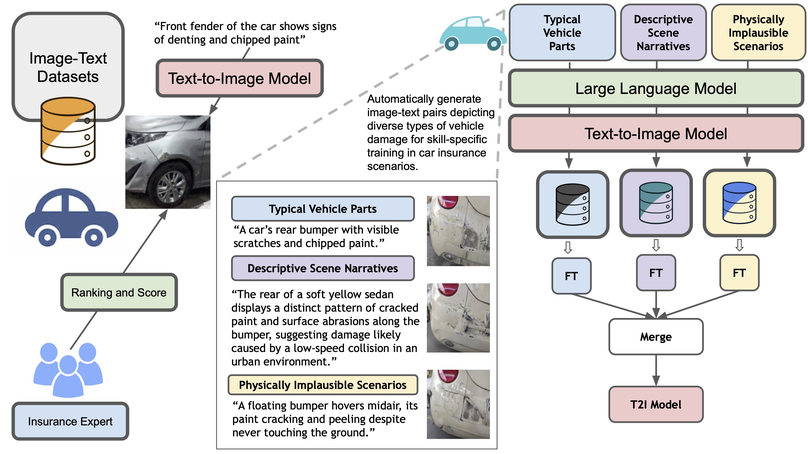

HERS presents a domain-adaptive diffusion framework for controllable, realistic, and trustworthy vehicle damage synthesis. The method decomposes complex damage generation into a set of risk-specific expert modules, each specializing in a particular damage type such as dents, scratches, broken lights, or cracked paint, and trained using self-supervised image–text pairs without manual annotation. These experts are later integrated into a unified diffusion model that balances specialization with generalization, enabling precise control over damage attributes while maintaining visual coherence. Extensive experiments across multiple diffusion backbones demonstrate consistent improvements in text–image alignment and human preference over standard fine-tuning baselines. Beyond visual fidelity, HERS highlights broader implications for auditability, fraud prevention, and the responsible deployment of generative models in high-stakes domains, underscoring the need for trustworthy and risk-aware diffusion systems in applications such as automated insurance assessment.

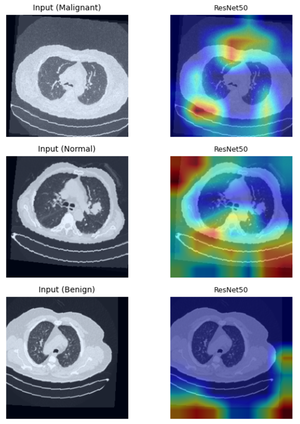

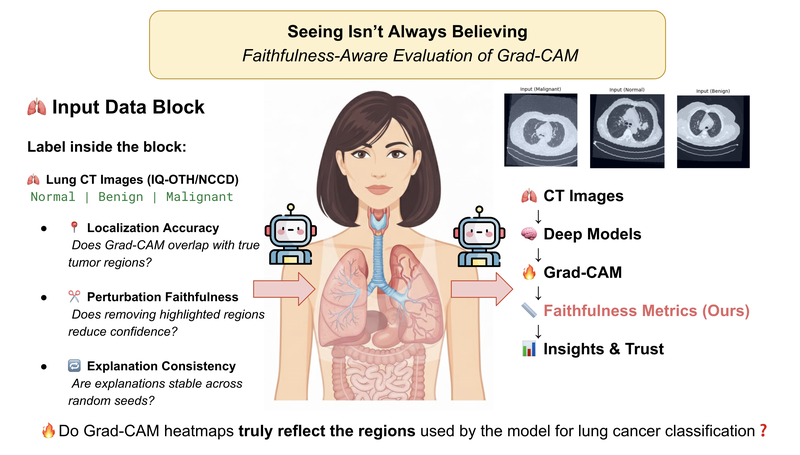

This study provides a rigorous and model-aware examination of the faithfulness and spatial reliability of Grad-CAM explanations for lung cancer CT image classification across both convolutional neural networks and Vision Transformer architectures. By systematically analyzing localization accuracy, perturbation-based faithfulness, and explanation consistency, the work reveals pronounced architecture-dependent disparities in how visual explanations align with true diagnostic evidence. While Grad-CAM often produces visually convincing heatmaps for convolutional models, these explanations can be spatially coarse or influenced by spurious correlations, raising concerns about shortcut learning and misleading interpretability. More critically, the analysis demonstrates that transformer-based models, despite strong predictive performance, exhibit a marked degradation in Grad-CAM reliability due to non-local attention mechanisms. Together, these findings underscore a central message: visually appealing explanations do not necessarily imply faithful model reasoning. The work highlights fundamental limitations of saliency-based XAI methods in high-stakes medical imaging and calls for more principled, model-aware interpretability approaches that can support genuinely trustworthy and clinically meaningful AI systems.
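
The Grad-CAM heatmap itself comes from a short computation: each feature map A_k of a chosen layer is weighted by the spatially averaged gradient of the class score, and the weighted sum is passed through a ReLU. The sketch below is a minimal pure-Python illustration, assuming the activations and gradients have already been extracted from a model; the toy arrays are invented, and this is not the study's evaluation code.

```python
def grad_cam(activations, gradients):
    """Compute a Grad-CAM map from pre-extracted activations and gradients.

    activations, gradients: nested lists of shape [K][H][W], where
    gradients[k][i][j] = d(class score) / d(activations[k][i][j]).
    Returns an H x W map normalized to [0, 1].
    """
    num_channels = len(activations)
    height, width = len(activations[0]), len(activations[0][0])

    # alpha_k: global-average-pooled gradient for channel k
    alphas = [
        sum(sum(row) for row in gradients[k]) / (height * width)
        for k in range(num_channels)
    ]

    # Weighted sum of feature maps, then ReLU to keep positive evidence only
    cam = [
        [max(0.0, sum(alphas[k] * activations[k][i][j] for k in range(num_channels)))
         for j in range(width)]
        for i in range(height)
    ]

    # Normalize to [0, 1] for display (left unchanged if the map is all zeros)
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


# Toy example: 2 channels on a 2x2 grid (values are illustrative only)
acts = [[[1.0, 0.0], [0.0, 2.0]],
        [[0.0, 3.0], [1.0, 0.0]]]
grads = [[[0.5, 0.5], [0.5, 0.5]],      # channel 0 pushes the score up
         [[-0.5, -0.5], [-0.5, -0.5]]]  # channel 1 pushes it down
print(grad_cam(acts, grads))  # [[0.5, 0.0], [0.0, 1.0]]
```

Because the map is computed at feature-map resolution (for example 7×7 at the last stage of a typical ResNet with 224×224 input) and then upsampled, it is spatially coarse by construction, consistent with the coarseness noted above.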

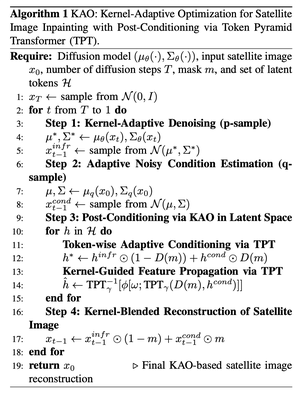

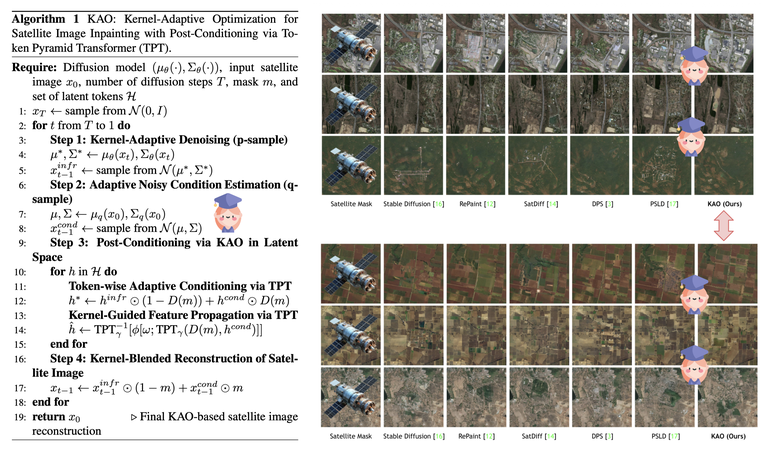

Satellite image inpainting is a crucial task in remote sensing, where accurately restoring missing or occluded regions is essential for robust image analysis. In this paper, we propose KAO, a novel framework that utilizes Kernel-Adaptive Optimization within diffusion models for satellite image inpainting. KAO is specifically designed to address the challenges posed by very high-resolution (VHR) satellite datasets, such as DeepGlobe and the Massachusetts Roads Dataset. Unlike existing methods that rely on preconditioned models requiring extensive retraining or postconditioned models with significant computational overhead, KAO introduces a Latent Space Conditioning approach, optimizing a compact latent space to achieve efficient and accurate inpainting. Furthermore, we incorporate Explicit Propagation into the diffusion process, facilitating forward-backward fusion, which improves the stability and precision of the method. Experimental results demonstrate that KAO sets a new benchmark for VHR satellite image restoration, providing a scalable, high-performance solution that balances the efficiency of preconditioned models with the flexibility of postconditioned models.

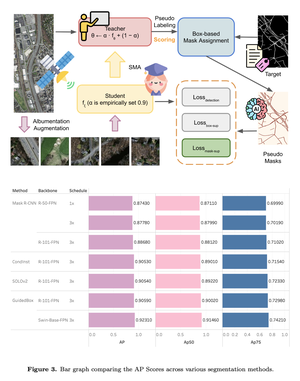

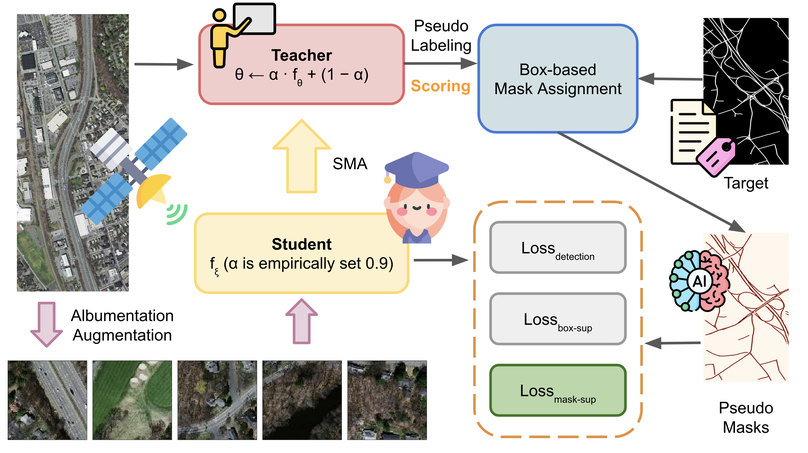

Road segmentation in remote sensing is crucial for applications like urban planning, traffic monitoring, and autonomous driving. Annotating objects with pixel-wise masks is far more laborious than drawing bounding boxes. Existing weakly supervised segmentation methods often rely on heuristic bounding box priors, but we propose that box-supervised techniques can yield better results. We introduce GuidedBox, an end-to-end framework for weakly supervised instance segmentation. GuidedBox uses a teacher model to generate high-quality pseudo-masks and employs a confidence scoring mechanism to filter out noisy masks. We also introduce a noise-aware pixel loss and an affinity loss to optimize the student model with pseudo-masks. Our extensive experiments show that GuidedBox outperforms state-of-the-art methods like SOLOv2, CondInst, and Mask R-CNN on the Massachusetts Roads Dataset, achieving an AP50 score of 0.9231. It also performs strongly on the SpaceNet and DeepGlobe datasets, demonstrating its versatility in remote sensing applications. Code is available at https://github.com/kaopanboonyuen/GuidedBox.
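
The teacher-student step described above, where low-confidence pseudo-masks are discarded before training the student, can be sketched as follows. This is a hypothetical illustration, not GuidedBox's code: the 0.7 threshold is an assumed value, and the confidence-weighted binary cross-entropy is a simple stand-in for the paper's noise-aware pixel loss, whose exact form is not given here.

```python
import math


def filter_pseudo_masks(pseudo_masks, threshold=0.7):
    """Keep only teacher pseudo-masks whose confidence meets the threshold.

    pseudo_masks: list of (mask, confidence) pairs; each mask is a flat
    list of 0/1 pixel labels. The threshold is an illustrative assumption.
    """
    return [(mask, conf) for mask, conf in pseudo_masks if conf >= threshold]


def confidence_weighted_bce(student_probs, pseudo_mask, confidence, eps=1e-7):
    """Mean per-pixel binary cross-entropy scaled by pseudo-mask confidence.

    Hypothetical stand-in for a noise-aware loss: less confident
    pseudo-masks contribute proportionally less to the student's loss.
    """
    total = 0.0
    for p, y in zip(student_probs, pseudo_mask):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return confidence * total / len(pseudo_mask)


teacher_output = [([1, 1, 0, 0], 0.95), ([0, 1, 1, 0], 0.40)]
kept = filter_pseudo_masks(teacher_output)
print(len(kept))  # 1: the low-confidence mask is discarded
loss = confidence_weighted_bce([0.9, 0.8, 0.2, 0.1], *kept[0])
print(round(loss, 4))
```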

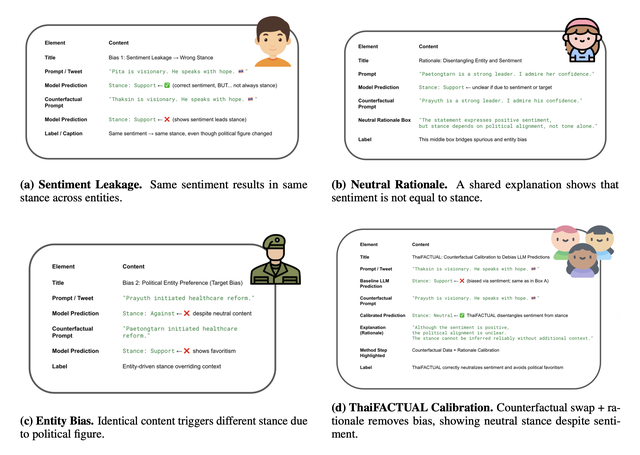

Political stance detection in low-resource and culturally complex settings poses a critical challenge for large language models (LLMs). In the Thai political landscape, marked by indirect language, polarized figures, and entangled sentiment and stance, LLMs often display systematic biases such as sentiment leakage and favoritism toward entities. These biases undermine fairness and reliability. We present ThaiFACTUAL, a lightweight, model-agnostic calibration framework that mitigates political bias without requiring fine-tuning. ThaiFACTUAL uses counterfactual data augmentation and rationale-based supervision to disentangle sentiment from stance and reduce bias. We also release the first high-quality Thai political stance dataset, annotated with stance, sentiment, rationales, and bias markers across diverse entities and events. Experimental results show that ThaiFACTUAL significantly reduces spurious correlations, enhances zero-shot generalization, and improves fairness across multiple LLMs. This work highlights the importance of culturally grounded debiasing techniques for underrepresented languages.
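
One common way to build counterfactual examples for probing entity favoritism is to swap the named entity while keeping the wording fixed: a model that changes its stance prediction across the swapped copies is reacting to the name rather than the argument. The sketch below is a generic illustration under that assumption, using made-up English placeholder entities; it is not ThaiFACTUAL's augmentation pipeline.

```python
def entity_swap_counterfactuals(text, entity, replacements):
    """Create counterfactual copies of `text` with `entity` swapped out.

    Assumes the stance expressed toward the entity is unchanged by the
    swap, so each copy keeps the original stance label.
    """
    if entity not in text:
        return []
    return [text.replace(entity, other) for other in replacements if other != entity]


def consistency_rate(predictions):
    """Fraction of counterfactual predictions that match the original one.

    predictions[0] is the model's stance on the original text; the rest
    are its stances on the counterfactual copies.
    """
    original, *counterfactual = predictions
    if not counterfactual:
        return 1.0
    return sum(p == original for p in counterfactual) / len(counterfactual)


# Placeholder entities and sentence, for illustration only
sentence = "Politician A promised to cut fuel prices, and voters should back this plan."
variants = entity_swap_counterfactuals(sentence, "Politician A", ["Politician B", "Politician C"])
print(variants[0])
print(consistency_rate(["favor", "favor", "against"]))  # 0.5
```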

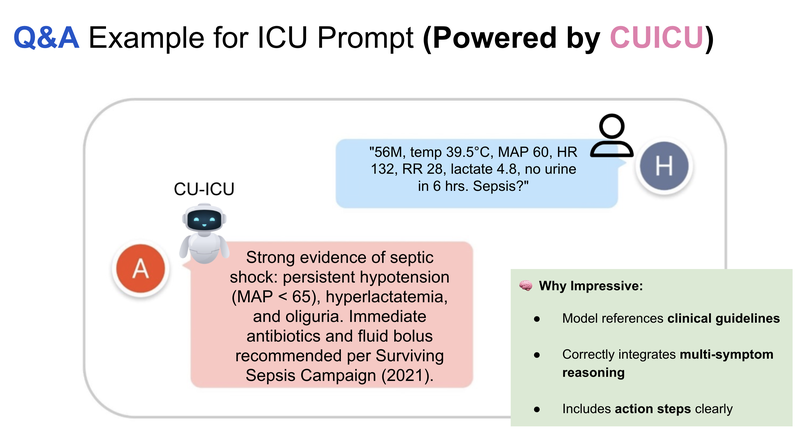

Integrating large language models into specialized domains like healthcare presents unique challenges, including domain adaptation and limited labeled data. We introduce CU-ICU, a method for customizing unsupervised instruction-finetuned language models for ICU datasets by leveraging the Text-to-Text Transfer Transformer (T5) architecture. CU-ICU employs a sparse fine-tuning approach that combines few-shot prompting with selective parameter updates, enabling efficient adaptation with minimal supervision. Our evaluation across critical ICU tasks—early sepsis detection, mortality prediction, and clinical note generation—demonstrates that CU-ICU consistently improves predictive accuracy and interpretability over standard fine-tuning methods. Notably, CU-ICU achieves up to a 15% increase in sepsis detection accuracy and a 20% enhancement in generating clinically relevant explanations while updating fewer than 1% of model parameters in its most efficient configuration. These results establish CU-ICU as a scalable, low-overhead solution for delivering accurate and interpretable clinical decision support in real-world ICU environments.

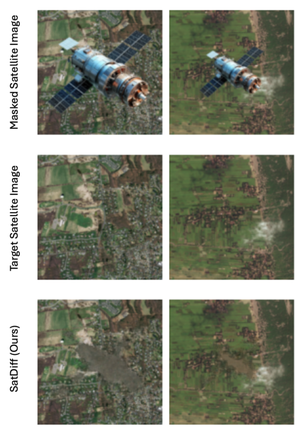

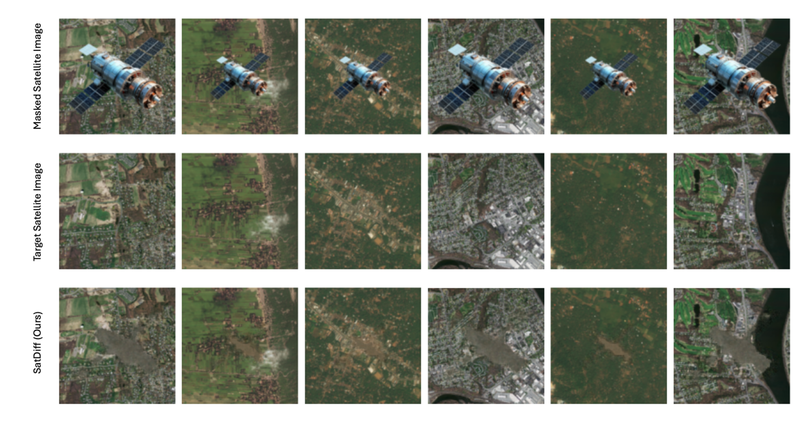

Satellite image inpainting is a critical task in remote sensing, requiring accurate restoration of missing or occluded regions for reliable image analysis. In this paper, we present SatDiff, an advanced inpainting framework based on diffusion models, specifically designed to tackle the challenges posed by very high-resolution (VHR) satellite datasets such as DeepGlobe and the Massachusetts Roads Dataset. Building on insights from our previous work, SatInPaint, we enhance the approach to achieve even higher recall and overall performance. SatDiff introduces a novel Latent Space Conditioning technique that leverages a compact latent space for efficient and precise inpainting. Additionally, we integrate Explicit Propagation into the diffusion process, enabling forward-backward fusion for improved stability and accuracy. Inspired by encoder-decoder architectures like the Segment Anything Model (SAM), SatDiff is seamlessly adaptable to diverse satellite imagery scenarios. By balancing the efficiency of preconditioned models with the flexibility of postconditioned approaches, SatDiff establishes a new benchmark in VHR satellite datasets, offering a scalable and high-performance solution for satellite image restoration. The code for SatDiff is publicly available at https://github.com/kaopanboonyuen/SatDiff.

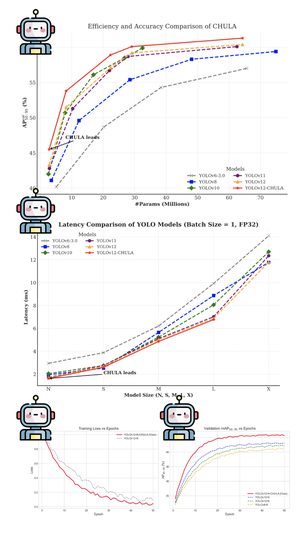

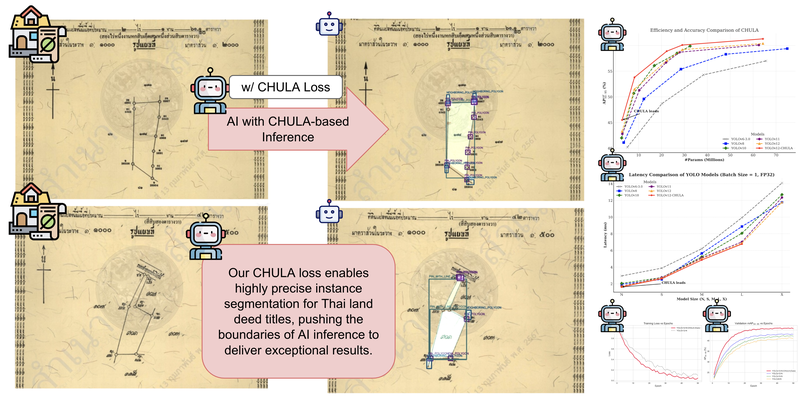

Accurately segmenting land boundaries from Thai land title deeds is crucial for reliable land management and legal processes, but remains challenging due to low-quality scans, diverse layouts, and complex overlapping elements in documents. Existing methods often struggle with these difficulties, resulting in imprecise delineations that can cause disputes or inefficiencies. To address these issues, we propose CHULA, a novel Custom Heuristic Uncertainty-guided Loss tailored specifically for robust land title deed segmentation. CHULA uniquely combines domain-specific heuristic priors with uncertainty modeling in a unified loss function that effectively guides the model to focus on clearer regions while refining boundaries and suppressing noisy areas. Evaluated on a carefully curated Thai Land Title Deed Dataset, CHULA achieves 92.4% accuracy, significantly surpassing standard segmentation baselines. Our results highlight the promise of integrating uncertainty and heuristic knowledge to enhance segmentation accuracy in complex, real-world documents. The code is publicly available at https://github.com/kaopanboonyuen/CHULA.
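
To make the idea of combining a heuristic prior with uncertainty in a single loss concrete, here is a minimal sketch. It borrows the learned-uncertainty weighting familiar from heteroscedastic regression (the data term is scaled by exp(-s) and a +s penalty is added) and multiplies each pixel by a heuristic weight. The functional form and parameter names are assumptions for illustration; the exact CHULA loss is in the linked repository.

```python
import math


def uncertainty_guided_loss(probs, targets, log_vars, prior_weights, eps=1e-7):
    """Mean per-pixel loss combining a heuristic prior with uncertainty.

    probs:         predicted foreground probabilities
    targets:       0/1 ground-truth labels
    log_vars:      predicted log-uncertainty s_i per pixel
    prior_weights: heuristic weights w_i (e.g. larger near expected boundaries)

    Each pixel contributes w_i * (exp(-s_i) * BCE_i + s_i): high uncertainty
    down-weights the data term on noisy pixels, while the +s_i term stops
    the model from declaring every pixel uncertain.
    """
    total = 0.0
    for p, y, s, w in zip(probs, targets, log_vars, prior_weights):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        bce = -(y * math.log(p) + (1 - y) * math.log(1 - p))
        total += w * (math.exp(-s) * bce + s)
    return total / len(probs)


loss = uncertainty_guided_loss(
    probs=[0.9, 0.6], targets=[1, 1], log_vars=[0.0, 1.0], prior_weights=[1.0, 2.0]
)
print(round(loss, 4))
```

The +s term matters: without it, the loss could be driven to zero simply by predicting very large uncertainty everywhere.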

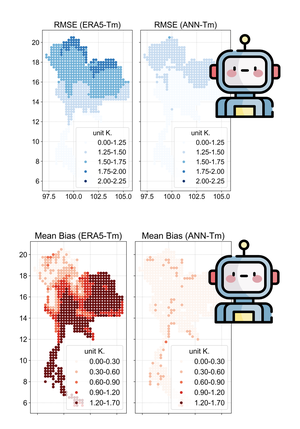

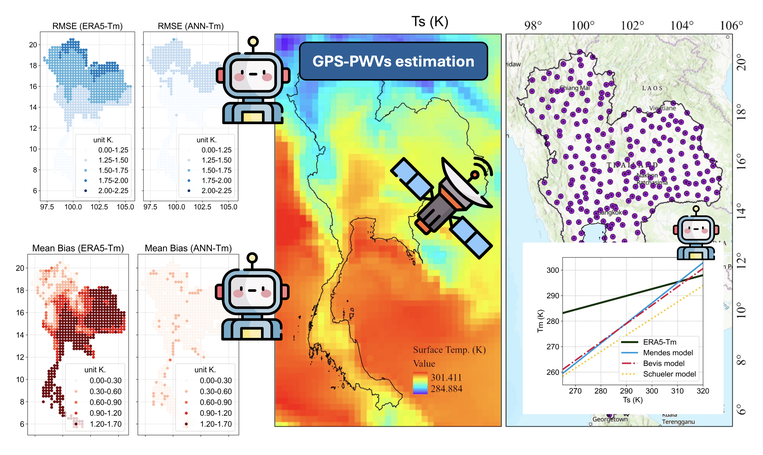

GNSS data offers a reliable alternative for estimating Precipitable Water Vapor (PWV), but accurate GPS-PWV determination in tropical climates requires the weighted mean temperature (Tm). Because traditional measurement methods are often unavailable in Thailand and existing empirical models show low accuracy, we propose a deep learning approach. Our Bidirectional Learning with Attention (BLA) model incorporates GRUs and an attention mechanism for Tm modeling. Trained on ERA5 data (2017-2021) and evaluated on 2022 data, BLA-Tm achieved a 76% improvement over conventional models and significantly reduced biases. Validation with 280 GNSS stations confirmed BLA-Tm’s superior accuracy in GPS-PWV estimation.
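
For context, Tm is conventionally defined from a vertical atmospheric profile as Tm = ∫(e/T) dz / ∫(e/T²) dz, where e is the water vapour partial pressure and T the air temperature, and it enters the factor that converts zenith wet delay into PWV. The sketch below evaluates that definition with the trapezoidal rule on an invented profile. It shows the reference quantity a model like BLA-Tm learns to predict, not the BLA model itself.

```python
def weighted_mean_temperature(heights_m, vapor_pressure_hpa, temperature_k):
    """Tm = integral(e/T dz) / integral(e/T^2 dz), via the trapezoidal rule.

    heights_m:          layer heights, increasing (m)
    vapor_pressure_hpa: water vapour partial pressure e at each height (hPa)
    temperature_k:      air temperature T at each height (K)
    """
    num = 0.0
    den = 0.0
    for i in range(len(heights_m) - 1):
        dz = heights_m[i + 1] - heights_m[i]
        e0, e1 = vapor_pressure_hpa[i], vapor_pressure_hpa[i + 1]
        t0, t1 = temperature_k[i], temperature_k[i + 1]
        num += 0.5 * dz * (e0 / t0 + e1 / t1)
        den += 0.5 * dz * (e0 / t0 ** 2 + e1 / t1 ** 2)
    return num / den


# Invented tropical-like profile, for illustration only
heights = [0.0, 1000.0, 2000.0, 3000.0]
vapor = [30.0, 20.0, 12.0, 6.0]
temps = [301.0, 294.0, 288.0, 282.0]
print(round(weighted_mean_temperature(heights, vapor, temps), 2))
```

Because the integrand weights are largest where the air is moist, Tm sits close to the temperature of the humid lower layers rather than the simple column average.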

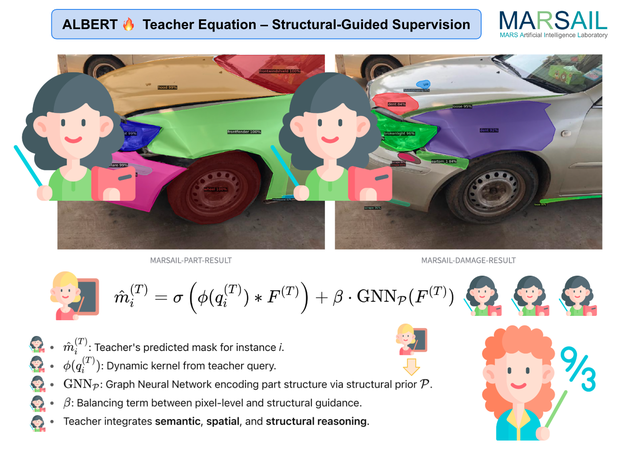

This paper introduces ALBERT, an instance segmentation model designed specifically for comprehensive car damage and part segmentation. Leveraging the power of Bidirectional Encoder Representations, ALBERT incorporates advanced localization mechanisms to accurately identify and differentiate between real and fake damages as well as segment individual car parts. The model is trained on a large-scale, richly annotated automotive dataset, categorizing damage into 26 types, identifying 7 fake damage variants, and segmenting 61 distinct car parts. Our approach demonstrates strong performance in both segmentation accuracy and damage classification, paving the way for intelligent automotive inspection and assessment applications. This work not only contributes a powerful tool for automated vehicle inspection but also lays the groundwork for future research in intelligent automotive diagnostics, safety evaluation, and insurance claim automation, with significant implications for both industry and research communities.

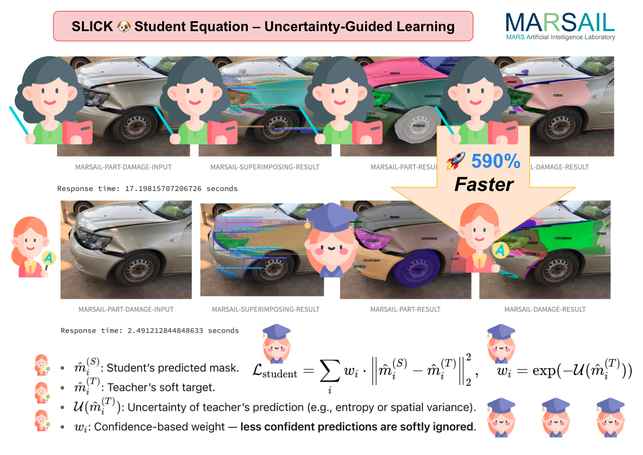

We propose SLICK, a novel and efficient framework for high-precision car damage segmentation, designed for real-world deployment in automotive insurance and inspection workflows. SLICK introduces five synergistic components: selective part segmentation guided by structural priors, localization-aware attention to highlight fine-grained damage, instance-sensitive refinement for precise boundary separation, cross-channel calibration to amplify subtle cues like scratches and dents, and a knowledge fusion module that integrates synthetic crash data, part geometry, and annotated insurance datasets. Trained using a teacher–student distillation strategy with ALBERT as the teacher, SLICK retains high segmentation fidelity while achieving up to 7× faster inference. Extensive experiments on large-scale automotive datasets demonstrate SLICK’s superior accuracy, generalization, and runtime efficiency, making it well suited for real-time, high-stakes applications in insurance automation and vehicle inspection.
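
The abstract states only that ALBERT serves as the teacher. A typical teacher-student objective for such a setup is the classic soft-target distillation loss: the KL divergence between temperature-softened teacher and student class distributions, scaled by T². The sketch below shows that standard loss for a single pixel as an assumption about what such training commonly uses, not SLICK's actual training code; the temperature of 4.0 is an arbitrary illustrative choice.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=4.0):
    """KL(teacher || student) on temperature-softened class distributions.

    Computed for one pixel; a segmentation model would average this over
    all pixels. Multiplying by T^2 keeps gradient magnitudes comparable
    across temperatures (the convention from Hinton et al.'s distillation).
    """
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s))
    return temperature ** 2 * kl


# Per-pixel class logits (e.g. background, scratch, dent); values are invented
teacher = [0.5, 3.0, 1.0]
student = [1.0, 2.0, 1.5]
print(round(distillation_loss(student, teacher), 4))
```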

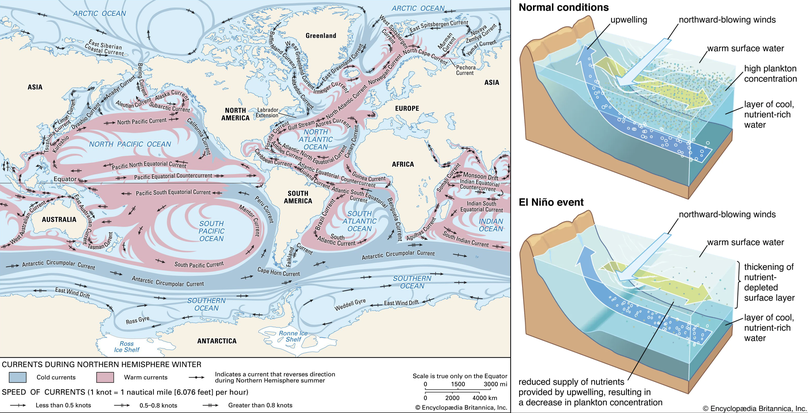

Forecasting sea surface currents is essential for applications such as maritime navigation, environmental monitoring, and climate analysis, particularly in regions like the Gulf of Thailand and the Andaman Sea. This paper introduces SEA-ViT, an advanced deep learning model that integrates Vision Transformer (ViT) with bidirectional Gated Recurrent Units (GRUs) to capture spatio-temporal covariance for predicting sea surface currents (U, V) using high-frequency (HF) radar data. The name SEA-ViT is derived from Sea Surface Currents Forecasting using Vision Transformer, highlighting the model’s emphasis on ocean dynamics and its use of the ViT architecture to enhance forecasting capabilities. SEA-ViT is designed to unravel complex dependencies by leveraging a rich dataset spanning over 30 years and incorporating ENSO indices (El Niño, La Niña, and neutral phases) to address the intricate relationship between geographic coordinates and climatic variations. This development enhances the predictive capabilities for sea surface currents, supporting the efforts of the Geo-Informatics and Space Technology Development Agency (GISTDA) in Thailand’s maritime regions. The code and pretrained models are available at https://github.com/kaopanboonyuen/gistda-ai-sea-surface-currents.

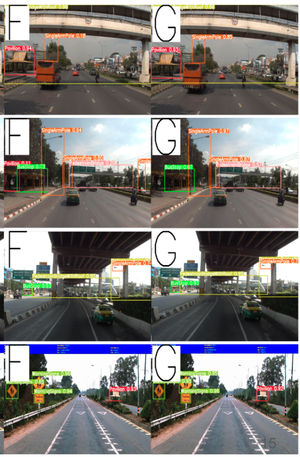

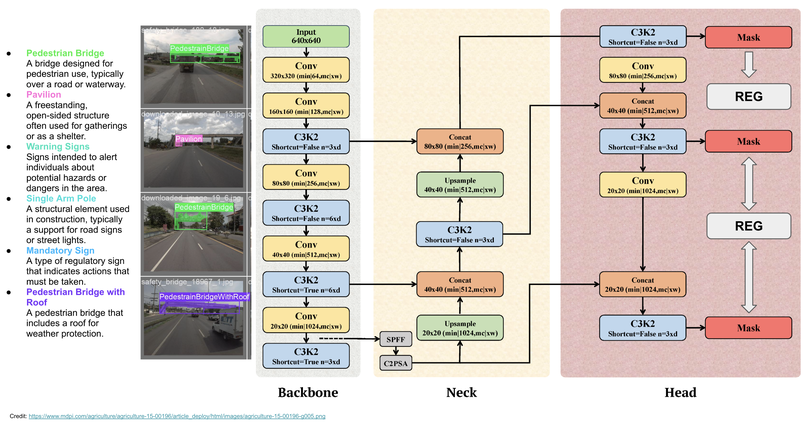

This paper explores road asset detection on Thai highways, presenting a novel approach that combines an upgraded REG model with Generalized Focal Loss. Our focus is on identifying key road elements, such as pavilions, pedestrian bridges, information and warning signs, and concrete guardrails, to boost road safety and infrastructure management. While deep learning methods have shown promise, traditional models often struggle with accuracy in difficult conditions such as cluttered backgrounds and variable lighting. To tackle these issues, we integrated REG with Generalized Focal Loss, enhancing its ability to detect road assets precisely. The REGx model led the way with a mAP50 of 80.340, mAP50-95 of 60.840, precision of 79.100, recall of 76.680, and an F1-score of 77.870. These findings highlight the REGx model’s superior performance, demonstrating the power of advanced deep learning techniques to improve highway safety and infrastructure maintenance, even in challenging conditions.
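
Generalized Focal Loss includes a Quality Focal Loss (QFL) term that extends focal loss to soft targets: QFL(σ) = -|y - σ|^β · [(1 - y) log(1 - σ) + y log σ], where σ is the predicted score and y is a soft target in [0, 1] (the IoU with the ground-truth box for positives, 0 for negatives). The sketch below implements only that QFL term as written in the GFL formulation; the distribution (box regression) part is omitted, β = 2.0 is an assumed default, and none of this is taken from the REGx code.

```python
import math


def quality_focal_loss(sigma, y, beta=2.0, eps=1e-7):
    """Quality Focal Loss term from Generalized Focal Loss.

    sigma: predicted joint classification-quality score in (0, 1)
    y:     soft target in [0, 1] (IoU with the ground truth; 0 for negatives)
    beta:  focusing parameter (2.0 here, an assumed default)
    """
    sigma = min(max(sigma, eps), 1.0 - eps)  # clamp to avoid log(0)
    bce = -((1.0 - y) * math.log(1.0 - sigma) + y * math.log(sigma))
    return abs(y - sigma) ** beta * bce


# An easy negative contributes far less than a confidently wrong one
print(round(quality_focal_loss(0.1, 0.0), 5))  # easy negative
print(round(quality_focal_loss(0.9, 0.0), 5))  # hard negative
```

The modulating factor |y - σ|^β shrinks the loss on predictions that already match their target, so training concentrates on hard cases such as assets against cluttered backgrounds.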

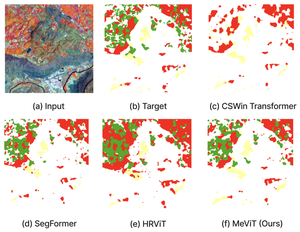

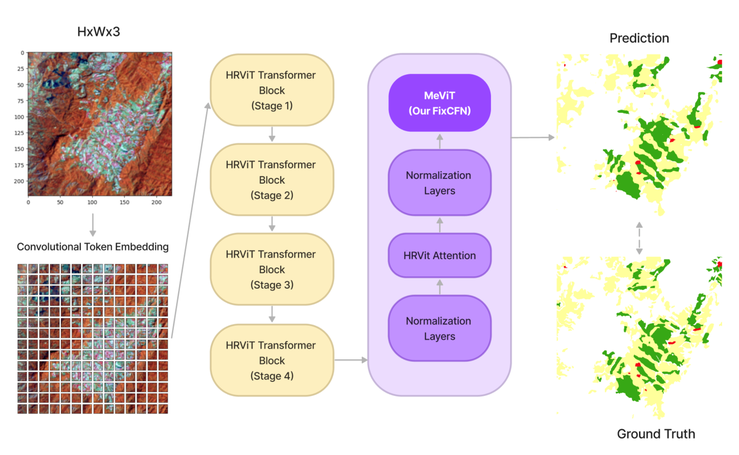

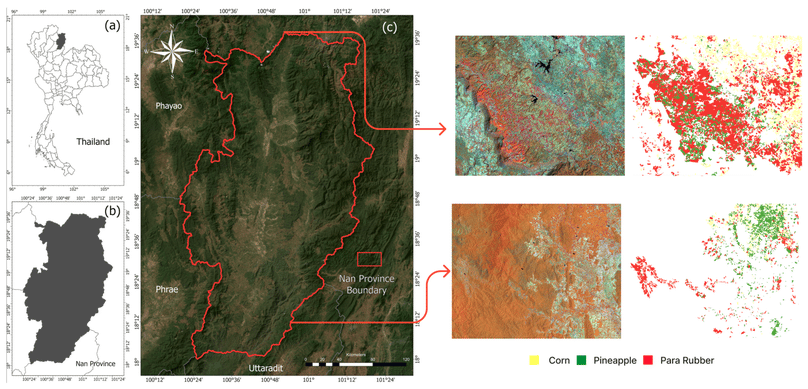

In this paper, we present MeViT (Medium-Resolution Vision Transformer), designed for semantic segmentation of Landsat satellite imagery, focusing on key economic crops in Thailand: para rubber, corn, and pineapple. MeViT enhances Vision Transformers (ViTs) by integrating medium-resolution multi-branch architectures and revising mixed-scale convolutional feedforward networks (MixCFN) to extract multi-scale local information. Extensive experiments on a public Thailand dataset demonstrate that MeViT outperforms state-of-the-art deep learning methods, achieving a precision of 92.22%, recall of 94.69%, F1 score of 93.44%, and mean IoU of 83.63%. These results highlight MeViT’s effectiveness in accurately segmenting Thai Landsat-8 data.
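
As a quick consistency check, the reported F1 score follows from precision and recall as their harmonic mean. The small helper below reproduces MeViT's 93.44% from 92.22% precision and 94.69% recall, and also the REGx F1 of 77.870 from its precision of 79.100 and recall of 76.680.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both in the same units, e.g. %)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(round(f1_score(92.22, 94.69), 2))  # 93.44 (MeViT)
print(round(f1_score(79.1, 76.68), 2))   # 77.87 (REGx)
```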

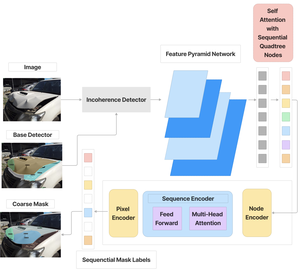

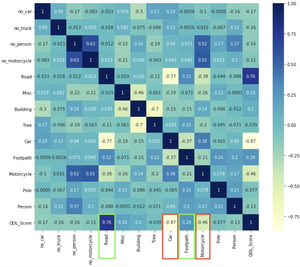

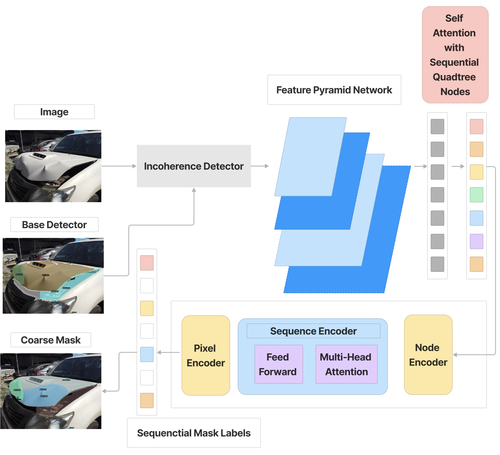

Evaluating car damages is crucial for the car insurance industry, but current deep learning networks fall short in accuracy due to inadequacies in handling car damage images and producing fine segmentation masks. This paper introduces MARS (Mask Attention Refinement with Sequential quadtree nodes) for instance segmentation of car damages. MARS employs self-attention mechanisms to capture global dependencies within sequential quadtree nodes and a quadtree transformer to recalibrate channel weights, resulting in highly accurate instance masks. Extensive experiments show that MARS significantly outperforms state-of-the-art methods like Mask R-CNN, PointRend, and Mask Transfiner on three popular benchmarks, achieving a +1.3 maskAP improvement with the R50-FPN backbone and +2.3 maskAP with the R101-FPN backbone on the Thai car-damage dataset. Demos are available at https://github.com/kaopanboonyuen/MARS.

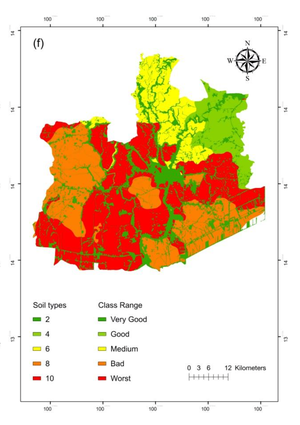

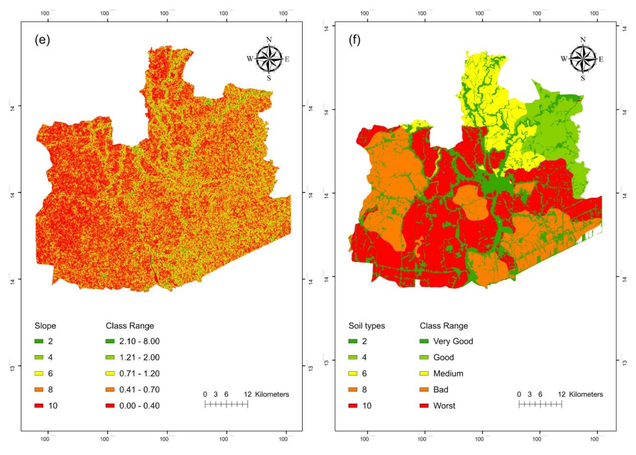

Flooding poses a significant challenge in Thailand due to its complex geography, traditionally addressed through GIS methods like the Flood Risk Assessment Model (FRAM) combined with the Analytical Hierarchy Process (AHP). This study assesses the efficacy of Artificial Neural Networks (ANN) in flood susceptibility mapping, using data from Ayutthaya Province and incorporating 5-fold cross-validation and Stochastic Gradient Descent (SGD) for training. ANN achieved superior performance with precision of 79.90%, recall of 79.04%, F1-score of 79.08%, and accuracy of 79.31%, outperforming the traditional FRAM approach. Notably, ANN identified that only three factors—flow accumulation, elevation, and soil types—were crucial for predicting flood-prone areas. This highlights the potential for ANN to simplify and enhance flood risk assessments. Moreover, the integration of advanced machine learning techniques underscores the evolving capability of AI in addressing complex environmental challenges.
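
The 5-fold cross-validation mentioned above splits the samples into five folds and validates on each fold exactly once, training on the other four. Below is a minimal pure-Python index splitter, assuming samples can be shuffled independently (spatially autocorrelated map cells may instead call for spatially blocked folds). It is an illustration, not the study's code.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation.

    Indices are shuffled once with a fixed seed for reproducibility;
    fold sizes differ by at most one when n_samples is not divisible by k.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size


for fold, (train_idx, val_idx) in enumerate(k_fold_indices(23, k=5)):
    print(fold, len(train_idx), len(val_idx))
```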

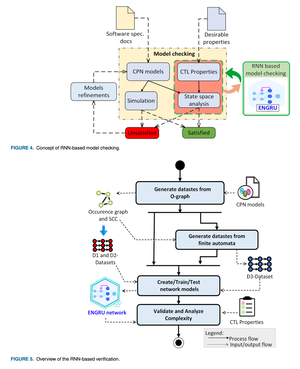

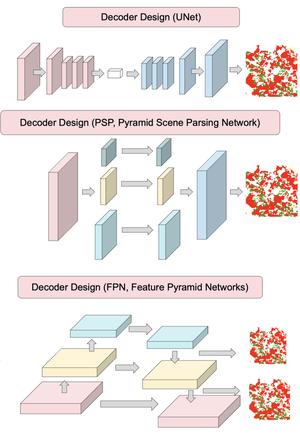

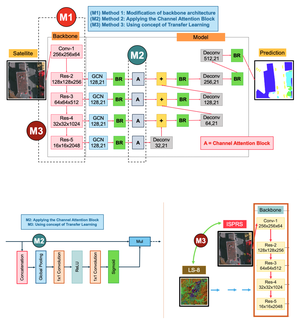

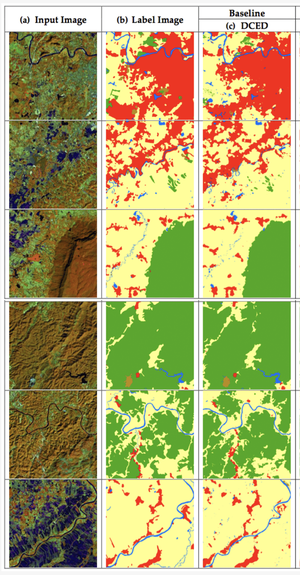

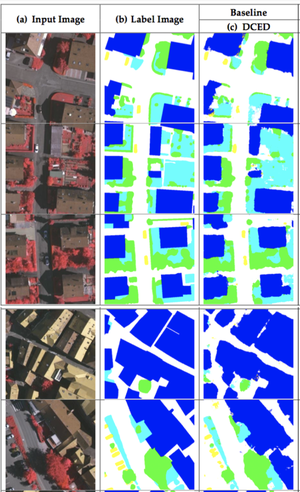

My PhD thesis focuses on improving semantic segmentation of aerial and satellite images, a crucial task for applications like agriculture planning, map updates, route optimization, and navigation. Current models like the Deep Convolutional Encoder-Decoder (DCED) have limited accuracy because they cannot recover low-level features and because training data is scarce. To address these issues, I propose a new architecture with five key enhancements: a Global Convolutional Network (GCN) for improved feature extraction, channel attention for selecting discriminative features, domain-specific transfer learning to address data scarcity, Feature Fusion (FF) for capturing low-level details, and Depthwise Atrous Convolution (DA) for refining features. Experiments on Landsat-8 datasets and the ISPRS Vaihingen benchmark showed that my proposed architecture significantly outperforms the baseline models in remote sensing imagery.
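
Channel attention of the kind listed above is often implemented in a squeeze-and-excitation style: pool each channel to a single descriptor, pass the descriptors through a small gating network, and rescale each channel by its gate. The pure-Python sketch below assumes that SE-style form (biases omitted) with hypothetical toy weights; the thesis architecture may differ in its details.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(feature_maps, w1, w2):
    """Squeeze-and-excitation style channel attention (pure-Python sketch).

    feature_maps: [C][H][W] nested lists
    w1: [C_r][C] weights of the reduction layer
    w2: [C][C_r] weights of the expansion layer
    Returns (rescaled feature maps, per-channel gates). Biases are omitted.
    """
    # Squeeze: global average pooling gives one descriptor per channel
    z = [sum(sum(row) for row in fm) / (len(fm) * len(fm[0])) for fm in feature_maps]
    # Excitation: reduce -> ReLU -> expand -> sigmoid gives gates in (0, 1)
    hidden = [max(0.0, sum(w * zc for w, zc in zip(row, z))) for row in w1]
    gates = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    # Scale: reweight each channel so the discriminative ones dominate
    scaled = [[[g * v for v in row] for row in fm] for g, fm in zip(gates, feature_maps)]
    return scaled, gates


# Two 2x2 channels and hypothetical weights that favour channel 0
feats = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]
w1 = [[1.0, 0.0]]
w2 = [[10.0], [-10.0]]
out, gates = channel_attention(feats, w1, w2)
print([round(g, 4) for g in gates])
```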

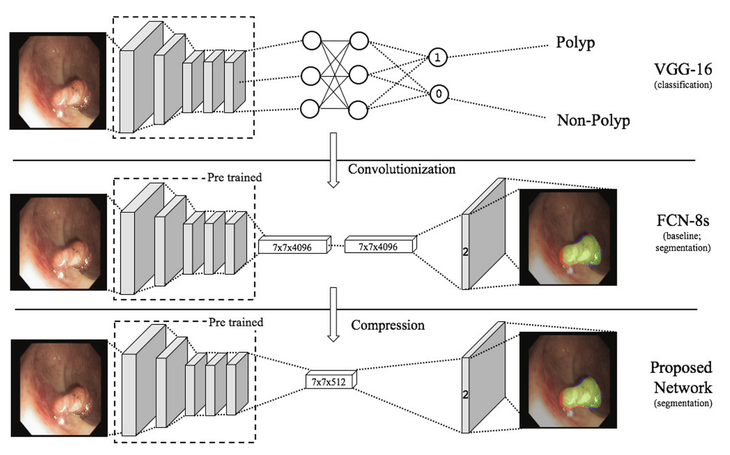

Colorectal cancer is one of the leading causes of cancer death worldwide. Colonoscopy is currently the most effective screening tool for diagnosing colorectal cancer, since it lets clinicians search for polyps that can develop into colon cancer. The main drawback of manual colonoscopy is its high polyp miss rate, which makes automatic polyp detection a crucial component of colonoscopy applications. Despite high evaluation scores, recently published methods based on fully convolutional networks (FCNs) have inference times too long for real clinical use because of the large number of parameters in the network. In this paper, we propose a compressed fully convolutional network, modified from FCN-8s, that detects and segments polyps in video images within the real-time constraints of a practical screening routine. Furthermore, our customized loss function makes the network more robust than the traditional cross-entropy loss. Experiments were conducted on the CVC-EndoSceneStill database, which consists of 912 video frames from 36 patients. Our framework obtains state-of-the-art results while running more than 7 times faster and using more than 9 times fewer weight parameters. These results indicate that our system has the potential to support clinicians during colonoscopy video analysis by automatically indicating suspicious polyp locations.

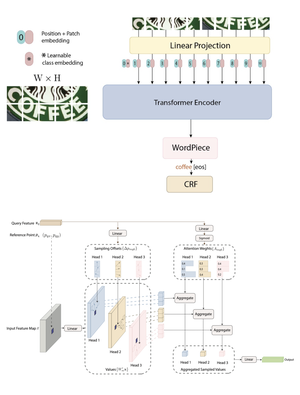

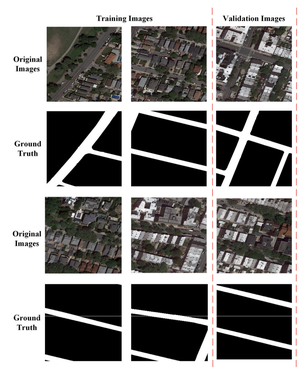

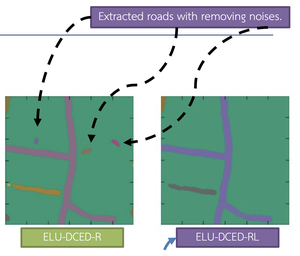

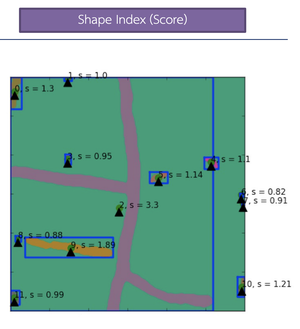

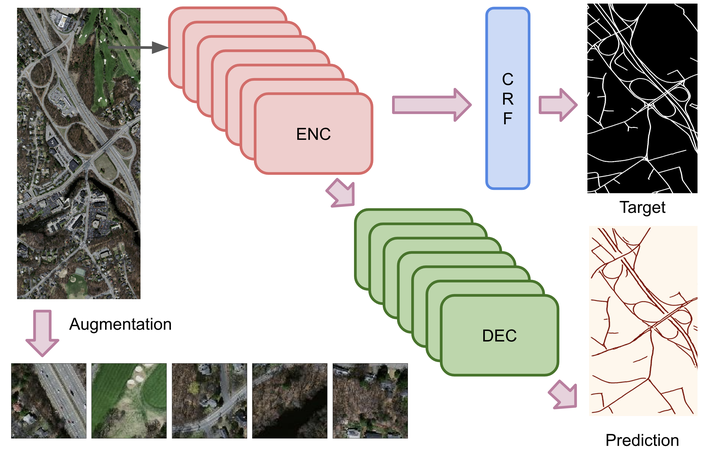

Semantic segmentation of remotely sensed aerial (very-high-resolution, VHR) images and satellite (high-resolution, HR) images has numerous application domains, particularly road extraction, where the segmented objects serve as essential layers in geospatial databases. Despite several efforts to use deep convolutional neural networks (DCNNs) for road extraction from remote sensing images, accuracy remains a challenge. This paper introduces an enhanced DCNN framework specifically designed for road extraction from remote sensing images by incorporating landscape metrics (LMs) and conditional random fields (CRFs). Our framework employs the exponential linear unit (ELU) activation function to improve the DCNN, leading to more complete and more accurate road extraction. Additionally, to minimize false classifications of road objects, we propose a solution based on the integration of LMs. To further refine the extracted roads, a CRF method is incorporated into our framework. Experiments conducted on Massachusetts road aerial imagery and Thailand Earth Observation System (THEOS) satellite imagery datasets demonstrated that our proposed framework outperforms SegNet, a state-of-the-art object segmentation technique, in most cases regarding precision, recall, and F1 score across various types of remote sensing imagery.
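
The ELU activation used in this framework is defined as ELU(x) = x for x > 0 and α(e^x - 1) otherwise (α = 1.0 by default). Unlike ReLU, it keeps a small, saturating negative response, so units with negative input still pass gradient. The comparison below is a minimal illustration of the activation only, not the framework's network code.

```python
import math


def elu(x, alpha=1.0):
    """Exponential linear unit: identity for x > 0, alpha * (exp(x) - 1) otherwise."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def relu(x):
    return max(0.0, x)


for x in (-2.0, -0.5, 0.0, 1.5):
    print(f"x={x:+.1f}  ReLU={relu(x):.3f}  ELU={elu(x):.3f}")
```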

Publications

To find relevant content, try searching publications, filtering using the buttons below, or exploring popular topics. A * denotes equal contribution.

Featured Talks

Research Communities

Visiting Faculty - College of Computing, Khon Kaen University (June 2023 - Present)

Courses taught:

- SC310005 Artificial Intelligence and Machine Learning Application: Introduction to AI and ML concepts and their applications.

- CP020002 Smart Process Management: Techniques for optimizing and automating business processes.

- SC320002 Business Intelligence: Methods for data analysis and decision-making in business contexts.

- CP020001 Introduction to Computers and Programming: Basics of computer systems and introductory programming skills.

- DE200001 Fundamentals of Data Engineering: Introduction to data engineering concepts and fundamental tools for beginners.

- Ministerial Order on the Appointment of Academic Staff (Order 5907-2566)

- Invitation Letter for a Special Lecturer Position (Order อว 660101.26/9304)

- Invitation Letter for a Special Lecturer Position (Order อว 660101.26/24844)

- Invitation Letter for a Special Lecturer Position (Order อว 660101.26/13320)

- Order on the Appointment as University Permanent Staff (Order 10634/2568)

- New Faculty Orientation on Zoom: Online orientation session welcoming newly appointed faculty members.

- Digital University Staff ID on iKKU: Official online staff identification card accessible through the iKKU system.

Guest Lecturer and AI Committee Member

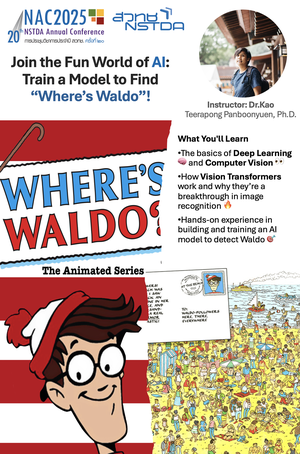

Smart Detective: AI in Solving Mysteries (NAC2025)

- Delivered an interactive workshop on deep learning and computer vision, introducing how Vision Transformers achieve breakthroughs in image recognition.

- Guided hands-on model building: training an AI system to detect Waldo.

-

- Delivered a keynote on “Mathematical Foundations of Vision Transformers in Car Insurance AI.”

- Poster: UAMC2025

AI Instructor - Department of Lands, Thailand (2025)

- Taught Large Language Models (LLMs) using land title deed data and demonstrated AI-driven automation for creating land deeds.

- Code and Lecture Slides

AI Instructor - Office of the Cane and Sugar Board (2025)

- Applied a Vision Transformer model to accurate sugarcane area classification and boundary detection from satellite images.

NSTDA One Day Camp at Sirindhorn Science Home (2024)

- Spoke about career opportunities and the path to becoming a research scientist in AI, as part of the GYSS2025 scholarship program.

- Full blog post and slides: Career Paths for AI Research Scientists

2108421 Modern Integrated Survey Technology (MIST) - Chulalongkorn University

- Guided students in applying Machine Learning to survey engineering.

CP411701 AI Inspiration Course - Khon Kaen University

- Delivered a lecture on “Generative AI: Current Trends and Practical Applications” at the College of Computing, Khon Kaen University.

- Slides: Generative AI

The 7th KVIS Invitational Science Fair

- Served as a committee member for the AI project at Kamnoetvidya Science Academy, Rayong, Thailand (29 January - 2 February 2024).

Industrial Advisory Board (IAB) - ECE KMUTNB

- Contributed to curriculum assessment and provided feedback to the Department of Electrical and Computer Engineering (ECE).

AI and ML Instructor - Nomklao Kunnathi Demonstration School

- Taught AI and ML under the Design Graphics Science and Technology Learning Group for high school students (Grade 10) in the Science and Mathematics Curriculum Plan.

Deep Learning Instructor - Thammasat University

- Conducted training on satellite data processing and interpretation for advanced military and disaster missions at the Faculty of Liberal Arts.

Senior Project Advisor - Thammasat University

- Advised students on senior projects in the Department of Geography, Faculty of Liberal Arts.

AI Instructor - Department of Lands, Thailand

- Taught AI using land title deed data.

- Code and Lecture Slides

-

- Public AI/LLM models that I fine-tuned for the research community.
