Formal Verification

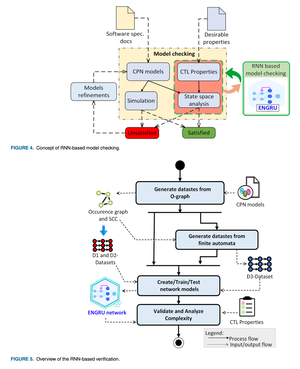

State-space graphs and automata are essential for modeling and analyzing computational systems. Recurrent neural networks (RNNs) underpin language models by processing sequential data and capturing contextual dependencies. Both RNNs and state-space graphs describe discrete-time systems, but whether the two are equivalent, especially in sentence structure modeling, remains unresolved. This paper introduces ENGRU (Enhanced Gated Recurrent Units), a deep learning approach for formal verification. ENGRU combines model checking, Colored Petri Nets (CPNs), and sequential learning to analyze systems at an abstract level. State-space enumeration of a CPN generates graphs and automata, which are then transformed into sequential representations from which ENGRU learns to predict system behavior. In discrete-time models, ENGRU predicts goal states effectively, which aids early bug detection and predictive state-space exploration. Experimental results show high accuracy and efficiency in goal state prediction. ENGRU’s source code is available at https://github.com/kaopanboonyuen/ENGRU.
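To make the pipeline more concrete, the following is a minimal sketch of its first two stages: enumerating a state space and flattening it into sequences that a recurrent model could consume. It is an illustration under stated assumptions, not ENGRU's actual preprocessing. A plain (uncolored) place/transition net stands in for a CPN, and the net itself, the `TRANSITIONS` table, and the functions `enumerate_state_space` and `paths_to_goal` are all hypothetical names introduced here.

```python
from collections import deque

# Hypothetical toy net with three places (indices 0..2). Each transition is
# (name, tokens consumed per place, tokens produced per place).
# Assumption: an uncolored net is used here in place of a full CPN.
TRANSITIONS = [
    ("t1", (1, 0, 0), (0, 1, 0)),
    ("t2", (0, 1, 0), (0, 0, 1)),
]


def enabled(marking, consume):
    """A transition is enabled if every place holds enough tokens."""
    return all(m >= c for m, c in zip(marking, consume))


def fire(marking, consume, produce):
    """Return the marking reached by firing an enabled transition."""
    return tuple(m - c + p for m, c, p in zip(marking, consume, produce))


def enumerate_state_space(initial):
    """Breadth-first enumeration of all markings reachable from `initial`.

    Returns a dict mapping each marking to an integer state id, and a list
    of labeled edges (source id, transition name, target id).
    """
    ids = {initial: 0}
    edges = []
    queue = deque([initial])
    while queue:
        marking = queue.popleft()
        for name, consume, produce in TRANSITIONS:
            if enabled(marking, consume):
                nxt = fire(marking, consume, produce)
                if nxt not in ids:
                    ids[nxt] = len(ids)
                    queue.append(nxt)
                edges.append((ids[marking], name, ids[nxt]))
    return ids, edges


def paths_to_goal(edges, start, goal):
    """Collect every simple path from `start` to `goal` as a list of state ids."""
    successors = {}
    for src, _, dst in edges:
        successors.setdefault(src, []).append(dst)
    found = []
    stack = [[start]]
    while stack:
        path = stack.pop()
        if path[-1] == goal:
            found.append(path)
            continue
        for nxt in successors.get(path[-1], []):
            if nxt not in path:  # keep paths simple (no repeated states)
                stack.append(path + [nxt])
    return found


if __name__ == "__main__":
    ids, edges = enumerate_state_space((2, 0, 0))
    goal = ids[(0, 0, 2)]  # marking in which both tokens reached the last place
    for sequence in sorted(paths_to_goal(edges, 0, goal)):
        print(sequence)
```

Each printed list is one state-id sequence ending in the goal state. Sequences of this kind are the sort of input a sequential model could be trained on to predict whether, and through which states, a goal is reached. How ENGRU itself encodes states and transitions is not specified in this abstract.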