Real-Time Polyps Segmentation for Colonoscopy Video Frames Using Compressed Fully Convolutional Network

Abstract

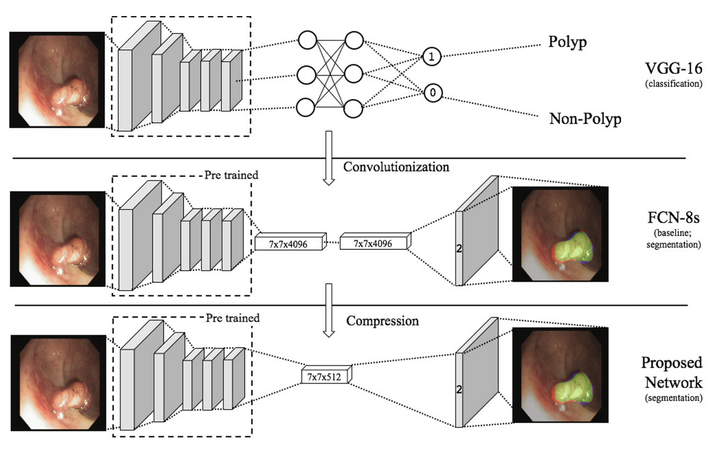

Colorectal cancer is one of the leading causes of cancer death worldwide. Colonoscopy is currently the most effective screening tool for colorectal cancer: it searches for polyps, which can develop into colon cancer. The drawback of manual colonoscopy is its high polyp miss rate, so polyp detection is a crucial issue in the development of colonoscopy applications. Despite their high evaluation scores, recently published methods based on fully convolutional networks (FCNs) have a large number of parameters and therefore require a very long inference (testing) time, which prevents their use in a real clinical process. In this paper, we propose a compressed fully convolutional network, obtained by modifying the FCN-8s network, that is able to detect and segment polyps in video frames within the real-time constraints of a practical screening routine. Furthermore, our customized loss function makes the network more robust than the traditional cross-entropy loss function does. The experiments were conducted on the CVC-EndoSceneStill database, which consists of 912 video frames from 36 patients. Our proposed framework obtains state-of-the-art results while running more than 7 times faster and requiring more than 9 times fewer weight parameters. The experimental results indicate that our system has the potential to support clinicians during the analysis of colonoscopy video by automatically indicating the locations of suspicious polyps.