Leveraging Large Language Models (LLMs) in Remote Sensing

Table of Contents

- Introduction

- Understanding Large Language Models (LLMs)

- LLMs in LULC Classification

- Case Study: LULC Classification on Sentinel-2 Imagery

- Conclusion

Introduction

Remote sensing has revolutionized the way we observe and understand the Earth’s surface. With the advent of satellites like Sentinel, Landsat-8, and THEOS, we have access to a plethora of high-resolution imagery that can be used for various applications, including Land Use/Land Cover (LULC) classification and image classification. However, analyzing and interpreting this vast amount of data is a complex task. Enter Large Language Models (LLMs), which have shown promise in various domains, including natural language processing, computer vision, and remote sensing.

In this blog, we will explore how LLMs can be applied to remote sensing, particularly in the domains of LULC and image classification. We will delve into the methodologies, algorithms, and techniques that can be utilized to harness the power of LLMs for these applications.

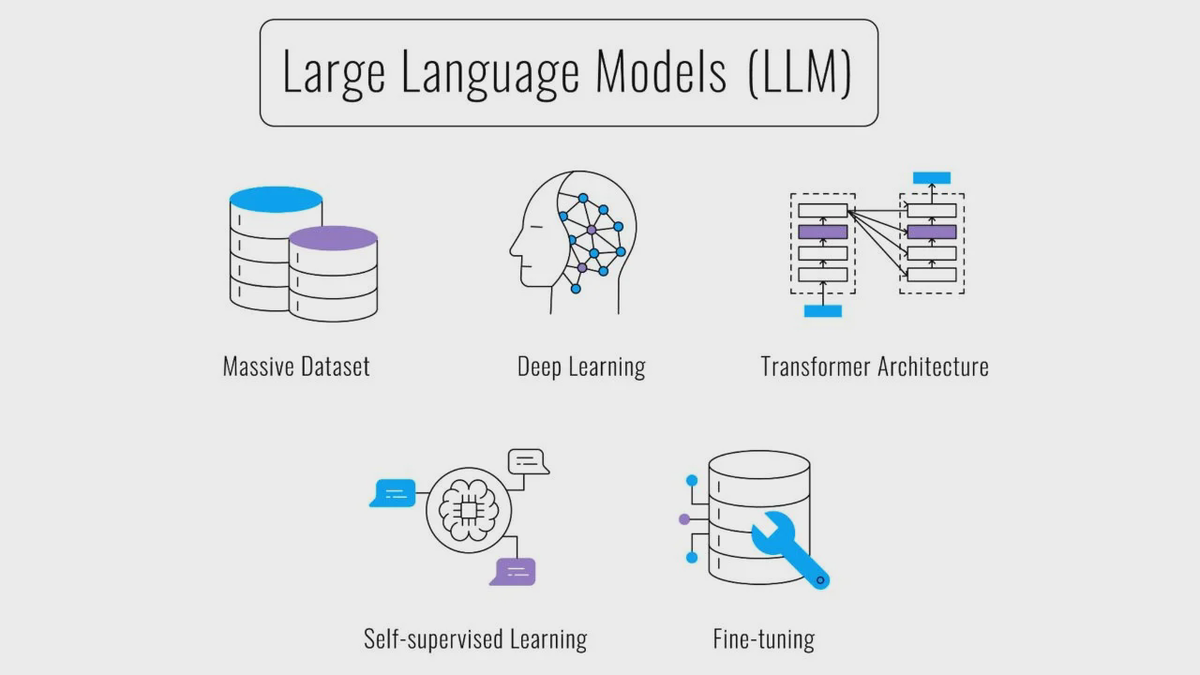

Understanding Large Language Models (LLMs)

Large Language Models, such as GPT-4, are deep learning models that have been trained on vast amounts of text data. They are capable of understanding and generating human-like text, making them highly versatile for various applications. In the context of remote sensing, LLMs can be used to analyze and interpret imagery data, aiding in tasks like LULC classification and image classification.

LLMs in LULC Classification

LULC classification involves categorizing different regions of an image into land use and land cover classes, such as forests, urban areas, water bodies, and agricultural land. Traditional methods for LULC classification include supervised and unsupervised learning techniques. LLMs, however, can enhance these methods by providing contextual understanding and improved feature extraction.

Methodology

The process of using LLMs for LULC classification can be summarized as follows:

-

Data Preprocessing:

- Collect satellite imagery from sources like Sentinel, Landsat-8, and THEOS.

- Perform image correction and normalization to ensure consistency in the data.

-

Feature Extraction:

- Use convolutional neural networks (CNNs) to extract features from the satellite images.

- Integrate LLMs to enhance feature extraction by incorporating contextual information from related text data (e.g., environmental reports, land use documentation).

-

Model Training:

- Train a classification model using the extracted features. The model can be a hybrid of CNNs and LLMs, where the CNN handles the spatial features and the LLM provides contextual understanding.

-

Classification and Validation:

- Apply the trained model to classify the satellite images into LULC categories.

- Validate the model using ground truth data and performance metrics like accuracy, precision, recall, and F1-score.

Case Study: LULC Classification on Sentinel-2 Imagery

To illustrate the application of LLMs in LULC classification, let’s consider a case study using Sentinel-2 imagery. Sentinel-2 provides high-resolution optical imagery, which is ideal for detailed LULC classification.

Data Collection and Preprocessing

We collected Sentinel-2 imagery for a region with diverse land cover types. The images were preprocessed to correct for atmospheric effects and normalize the reflectance values.

Feature Extraction

A pre-trained CNN, such as ResNet-50, was used to extract spatial features from the images. Simultaneously, a large corpus of environmental text data was fed into an LLM to extract contextual features.

Model Training and Classification

The extracted features were combined and fed into a hybrid classification model. The model was trained using labeled ground truth data. The results showed a significant improvement in classification accuracy compared to traditional methods.

Conclusion

The integration of LLMs in remote sensing, particularly for LULC and image classification, holds immense potential. By combining the spatial feature extraction capabilities of CNNs with the contextual understanding of LLMs, we can achieve more accurate and meaningful classifications. As remote sensing technology continues to evolve, the role of advanced AI models like LLMs will become increasingly crucial in unlocking new insights from satellite imagery.

In this blog, we explored the methodologies and techniques for leveraging LLMs in remote sensing applications. The case study on Sentinel-2 imagery demonstrated the practical benefits of this approach. As we move forward, further research and development in this field will undoubtedly lead to more innovative and effective solutions for remote sensing challenges.